TL;DR: If you’re applying firmware upgrade SD1A to Seagate drives, you need to double-check the firmware actually applied properly. If the Seagate patcher doesn’t work, make sure to use Legacy mode on SATA in the BIOS, instead of the more modern AHCI mode.

So perhaps you have heard of Seagate’s little manufacturing issue with its internal 3.5-inch Barracuda 7200.11 1TB drives a while back — namely, that some drives shipping with SD15 firmware are dying horribly. I had the unfortunate experience of buying such a hard drive — the ST31000340AS — as a scratch disk for my main machine, a MacBook Pro with a mere 240 GB internal drive (a pre-unibody revision, where the HD is insanely difficult to replace).

Seagate did in fact issue a firmware update — SD1A — that supposedly addressed this issue, but of course, there’s one catch: you can’t install the firmware through an external drive enclosure. In communication with Seagate support, a representative confirmed that for those of us without a desktop tower that has a SATA bay, we’re hosed:

Unfortunately, due to the nature of firmware updates and the way external drives work, the firmware update program cannot directly communicate with the drive in the manner it needs to in order to be able to upload the new firmware to the drive. It must be plugged into an internal SATA controller in order to update the drive.

Fair enough. That makes technical sense — but of course, it doesn’t work for me. I asked whether they would handle a mail-in repair, given that I have no easy access to such a desktop. The answer, of course, is No.

I have to find a desktop, open it up, jam this baby in (possibly in place of the existing drive if there’s only one bay), update the firmware, and put everything back together. Sadly, most of my friends who still own desktops would not trust me that far.

Half a year passes, and I finally find a ~~sucker~~ good friend who’s ~~gullible~~ awesome enough for me to try this procedure on his machine. The fellow owns a nice if aging Dell Precision T5400, which comes with two SATA bays (so I don’t have to inflict undue harm onto the existing system). Since this thing can run two drives at once, I can use the first method (a Windows-based firmware updater), though I burned a boot CD for the second method just in case. I popped in the drive, fired up Windows XP, downloaded the Windows-based Firmware Update Utility, double-clicked, and thought it was the (triumphant) end. In fact, it took 3 hours of my life to find out just how deep this rabbit hole goes.

Problem 1: The lying updater

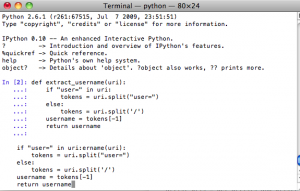

The firmware updater will give a bunch of scary warnings and then reboot the machine. It will automatically reboot to a Seagate Loader screen, which attempts to apply the patch to all eligible SATA drives. To its credit, it’ll skip the non-qualifying (i.e. non-Seagate, non-Barracuda, etc.) drives, but it’ll still try them out first. At the end of the process, it will report “firmware downloaded” and “SUCCESSFUL” or some variant thereof, and automatically reboot back into Windows.

At this point, I advise you to use the SeaTools utility to verify that the firmware update actually applied. On a stock Dell T5400 (and perhaps other models as well), this check will show that the updater is a lying scumbag, whatever it claims on screen. In my case, the drive still reported firmware SD15, the broken one.
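If you’d rather script that check than click through SeaTools, here is a minimal Python sketch. It is not what I used on the day. It assumes the smartmontools package is installed, that `smartctl -i` prints a `Firmware Version:` line for the drive, and that you run it with administrator rights. The device path in the usage note is a placeholder, and the function names are made up for illustration.

```python
import re
import subprocess

FIXED_FIRMWARE = "SD1A"   # the patched revision discussed in this post
BROKEN_FIRMWARE = "SD15"  # the revision my drive shipped with


def parse_firmware(info_text):
    """Return the firmware revision found in `smartctl -i` style text, or None."""
    match = re.search(r"^Firmware Version:\s*(\S+)", info_text, re.MULTILINE)
    return match.group(1) if match else None


def firmware_is_fixed(info_text, expected=FIXED_FIRMWARE):
    """True only if the reported revision equals the expected one."""
    return parse_firmware(info_text) == expected


def read_drive_info(device):
    """Run `smartctl -i` on a device path and return its text output.

    Assumption: smartctl is on the PATH and the caller has the rights
    needed to query the drive.
    """
    result = subprocess.run(
        ["smartctl", "-i", device], capture_output=True, text=True
    )
    return result.stdout
```

Something like `firmware_is_fixed(read_drive_info("/dev/sdb"))` would then tell you whether the flash really took, with `/dev/sdb` swapped for whatever your system calls the drive. The point is simply to ask the drive for its revision after the updater reports success, instead of trusting the updater.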

Problem 2: The broken Boot CD

To save both me and my gracious host (who’s starting to suspect my computer-fixin’ skills now) some time, I decided to try the boot CD method, rather than pounding my head trying to see why the updater was lying. I downloaded the boot CD from the same Seagate Support site above, burned it to disc, and tried it out.

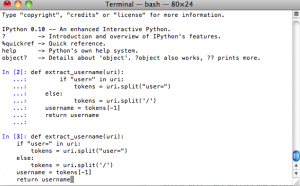

The result is a new SelfSolved posting: SelfSolved #59: getFatBlock error when upgrading Seagate Barracuda 7200.11 firmware. In essence:

The FreeDOS boot CD reports a number of “error reading partition table drive 01 sector 0” errors. This is followed by “get Fatblock failed:0x000000e8” or some variant of “getFatBlock failed :”. The FreeDOS boot process appears to stall at this stage, and does not continue to the firmware flasher program.

That was lovely.

The Solution

I chased some red herrings. I came across postings about failures in various FreeDOS-based Seagate tools. One such post mentioned that it took a long time for the boot disc to get over the “error reading partition table” errors, but I waited forever (well, 15 minutes) and the boot process did appear to be frozen / stalled. I reformatted the drive via diskpart clean, thinking that the getFatBlock and error reading partition errors were related to a non-MBR partition table (I had it set to GPT). I should have realized, of course, that the errors were completely unrelated to filesystems, despite the “fat block” to which it refers.

The actual solution is deceptively simple: the boot disc and flasher appear to handle AHCI-based SATA mode badly. The Dell I was using was set to AHCI, one of three possible SATA options (Legacy, AHCI, and RAID). Apparently the boot disc simply doesn’t handle this mode correctly on the Dell machine, which may also be why the Windows-based updater lies. When the machine switches on, use F12 to enter the boot menu, and select Setup to enter the BIOS. Then, on the list of Drive Options, skip past the SATA drives and down to SATA options. Pick the Legacy option to use ATA mode, instead of AHCI. Once this is done, the boot disc will function correctly, and the updated firmware will be applied without incident. Remember to switch the mode back to AHCI afterward; it’s the default for a reason, no doubt.

The “error reading partition” messages were complete red herrings. They appear whether you are in the right SATA mode or not, and do not appear to affect the operation of the firmware updater or the boot process. It should not take very long to get to the flasher on this particular setup, so don’t wait on that message too long: if the boot sits there for minutes, that’s a good sign something’s not quite right.

In the end, I did recover my $100 hard drive, and the confidence of my peer in my mad hardware skillz (actually, quite non-existent).

Discussion

In the end, I’m quite appalled at Seagate. This sort of failure shouldn’t have happened, of course. Once it did, Seagate should have offered to take back and replace broken drives; the data I had on there was non-critical. I would have been perfectly willing to pay shipping costs to get a fixed replacement through mail-in service. I should not have been forced to search my social network for a person willing to let me tear his desktop computer apart, for a dubious and unsure firmware update procedure that fails mysteriously. I spent an additional 3 hours tracing mystery failures, for which the error messages were rather useless. Without my trusty iPhone and access to the Internet, I would not have been able to solve this problem. How should I have known what “getFatBlock failed” means?

This little episode has convinced me to never buy a Seagate drive again. I simply cannot afford the time and energy for this sort of firmware upgrade adventure. While I was looking for a desktop to tear apart, I bought a Western Digital Caviar Black 1TB drive instead. Another $100, but at least I had a scratch drive for my work.

The moral of the story: Seagate, you are the worst storage vendor I’ve had to work with so far. I hope this record is not broken in the future.