Outlook 2011 on Mac OS X, v14.1.3, for whatever reason, still does not properly support either the “format=flowed” content-type parameter or the “quoted-printable” transfer encoding for plaintext emails. As a result, plaintext emails go out as mangled messes, full of arbitrarily inserted line breaks. As far as I recall, this is a regression from Entourage, which never handled plaintext quite this badly, and it comes despite Microsoft’s promises to have “implemented format=flowed”.

This is the last straw. I’ve been a loyal MS Entourage / MS Outlook user since the days of Outlook Express for Mac and Office 2001. But at this point, this software has actively impeded my communications with my friends and colleagues. We’re done.

The Problem

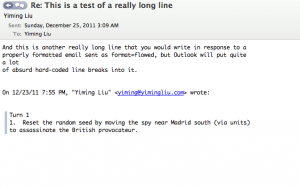

Here’s a really simple illustration of the problem, from the receiver’s end:

See how the URL, which was composed as one plaintext line, gets split up into two lines?

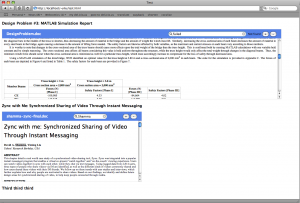

Here is another example, purely from the editor UI (and not even being sent yet). I start with a perfectly good reply saved as a draft:

I make a small wording change and resave:

See that third line? Thanks to the hard line breaks inserted by Outlook (even at composition stage), the line wrap has been mangled. This draft has to be re-wrapped manually, by the tedious process of deleting the newline-based hard line breaks from every line following in the paragraph. That was a short paragraph. Imagine doing that in a long paragraph, from the first line.

To add insult to injury, the editor does not even have a “re-wrap” function, which would at least solve the problem at the user-interface level (as opposed to the protocol level). Obviously no one at Microsoft sends plaintext emails anymore.

The Issue

Back when email was first devised, servers didn’t have a lot of memory, and people had pretty tiny terminals with fixed line widths and not a whole lot of processing power to deal with it. The Internet standard for email messages, RFC 2822 (http://www.ietf.org/rfc/rfc2822.txt), Section 2.1.1, defines limits for the lines of email body text transferred over SMTP:

There are two limits that this standard places on the number of characters in a line. Each line of characters MUST be no more than 998 characters, and SHOULD be no more than 78 characters, excluding the CRLF.

The 998 character limit is due to limitations in many implementations which send, receive, or store Internet Message Format messages that simply cannot handle more than 998 characters on a line. Receiving implementations would do well to handle an arbitrarily large number of characters in a line for robustness sake…

The more conservative 78 character recommendation is to accommodate the many implementations of user interfaces that display these messages which may truncate, or disastrously wrap, the display of more than 78 characters per line…

…it is encumbant upon implementations which display messages to handle an arbitrarily large number of characters in a line (certainly at least up to the 998 character limit) for the sake of robustness.

Basically, the SMTP server can count on messages that come in at no more than 80 characters per line, CRLF included (and always fewer than 1000 characters per line), and email clients can trust that they only have to render up to the 78th column of text. This limitation is hardly useful in the modern age, but it persists because it’s part of the standard. And it’s a fine, conservative design model. But nowadays we write some pretty long lines without breaking them ourselves, so something magical has to happen in the email client itself, Outlook 2011 included.
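To make the two limits concrete, here is a minimal Python sketch that checks each line of a message body against them. The function name `classify_lines` and the verdict strings are my own inventions for illustration, and the sketch counts characters rather than octets, which is only equivalent for plain ASCII text.

```python
MUST_LIMIT = 998    # hard limit from RFC 2822 section 2.1.1, excluding the CRLF
SHOULD_LIMIT = 78   # recommended limit from the same section, excluding the CRLF


def classify_lines(body: str) -> list[tuple[int, int, str]]:
    """Return (line_number, length, verdict) for every line of a message body.

    Hypothetical helper: lengths are counted in characters, which matches
    octets only for ASCII text.
    """
    results = []
    for number, line in enumerate(body.splitlines(), start=1):
        length = len(line)
        if length > MUST_LIMIT:
            verdict = "violates MUST"
        elif length > SHOULD_LIMIT:
            verdict = "exceeds SHOULD"
        else:
            verdict = "ok"
        results.append((number, length, verdict))
    return results
```

A line of 79 characters would come back as “exceeds SHOULD”: still legal on the wire, but at the mercy of whatever displays it.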

The naive solution, of course, is to slap arbitrary line breaks into the user’s email message at every 78 characters, which is what ye olde email clients of yesteryear did (looking at you, pine; how did I ever put up with you…) and what Outlook 2011 still does. It’s a matter of personal preference whether this is a reasonable solution. Proponents argue that the email will “always look the same” on all devices, including those limited to 78 chars per line.

I (and many others), on the other hand, think the spirit of the RFC is to allow the client that actually handles the message to decide where to break lines. With the exception of source code, it is almost always better for the email client to use the full width of its display, however many characters that might be. And source code should not be mangled by arbitrary inserted line breaks either: what if newlines are meaningful in the language, and the author used more than 78 characters per line? The example with the URI illustrates the problem. The URI got an arbitrary newline in the middle, destroying its meaning. Users who copy-paste the two lines will end up getting a 404, due to that stupid inserted newline in the middle of it. This should not be allowed to happen.
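The URL breakage is easy to reproduce with a toy wrapper. This Python sketch is not Outlook’s actual algorithm (which I have no way of inspecting); `naive_hard_wrap` and the example.com address are made up, and it simply cuts at every 78th character as the naive approach would.

```python
def naive_hard_wrap(line: str, width: int = 78) -> list[str]:
    """Chop a line into fixed-width pieces with no regard for its content.

    Hypothetical stand-in for "insert a hard break every 78 characters".
    """
    return [line[i:i + width] for i in range(0, len(line), width)]


# A made-up URL that is longer than 78 characters.
url = "http://example.com/" + "a" * 90
pieces = naive_hard_wrap(url)

# What the recipient sees: two lines, neither of which is the real URL.
as_received = "\n".join(pieces)
```

Nothing in `as_received` tells the receiving client that the newline was not typed by the author, so the client cannot safely glue the pieces back together.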

Because this naive solution was not perfect, an extension was proposed as RFC 2646. This format of email is characterized by the content-type:

Content-type: text/plain; charset=US-ASCII; format=flowed

In format=flowed emails, the sending and receiving email clients are allowed to reflow the text around the user’s own line breaks. The reflowing rules are simple: the sender wraps long paragraphs to a proper line length while the message is “on the wire”, leaving a trailing space at the end of each line it breaks to mark the break as a soft one. The receiver treats any line ending in a space as continuing on the next line and recombines them for display, while user-inserted hard line breaks are preserved. Modern email clients like Thunderbird, designed for user comfort and the generous system limitations of the year 2011, implement this standard.
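As a rough sketch of those rules, the Python below wraps paragraphs with trailing-space soft breaks and rejoins them on receipt. It assumes a 72-column wrap width and leaves out quoted (“>”) text, the “-- ” signature separator, and the DelSp parameter that the later revision of the spec (RFC 3676) added; the function names are invented for illustration.

```python
def _stuff(line: str) -> str:
    """Space-stuff lines that a flowed-aware reader could otherwise misparse."""
    if line.startswith((" ", ">", "From ")):
        return " " + line
    return line


def encode_flowed(text: str, width: int = 72) -> str:
    """Wrap each paragraph; a trailing space marks a soft line break."""
    wire_lines = []
    for paragraph in text.split("\n"):
        # A trailing space would be misread as a soft break, so drop it.
        words = paragraph.rstrip(" ").split(" ")
        current = ""
        for word in words:
            # Break before this word if it would push the line past the width.
            # Words longer than the width (such as URLs) are never cut.
            if current and len(current) + len(word) + 1 > width:
                wire_lines.append(_stuff(current))  # ends in a space: soft break
                current = ""
            current += word + " "
        wire_lines.append(_stuff(current[:-1]))     # no trailing space: hard break
    return "\r\n".join(wire_lines)


def decode_flowed(wire: str) -> str:
    """Rejoin soft-broken lines into the paragraphs the author typed."""
    paragraphs = []
    current = ""
    for line in wire.split("\r\n"):
        if line.startswith(" "):
            line = line[1:]                   # undo space-stuffing
        if line.endswith(" "):
            current += line                   # soft break: paragraph continues
        else:
            paragraphs.append(current + line) # hard break: paragraph ends
            current = ""
    if current:
        paragraphs.append(current)
    return "\n".join(paragraphs)
```

The point of the design is that a long URL survives intact: the encoder only ever breaks at a space, and the decoder can tell the sender’s soft breaks apart from the author’s hard ones.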

Guess what format Outlook 2011 sends?

Content-type: text/plain; charset="US-ASCII"

Not even an option to change that behavior. It does not appear that Outlook 2011 deals with any of this. It just inserts some line breaks and calls it a day.

An alternative, implemented by Apple’s Mail.app, is to send messages with the Content-Transfer-Encoding header set to “quoted-printable”, as per RFC 2045. In this model, soft line breaks are sent explicitly: an “=” at the very end of an encoded line marks the break, and encoded lines are kept to at most 76 characters. On the receiving end, the client drops the “=” together with the line break that follows it and concatenates the pieces back together for display.
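Python’s standard-library `quopri` module implements this encoding, so the soft-break behavior can be seen in a few lines. This is only an illustration of the encoding itself, not of how Mail.app builds its messages; the wrapper names and the example.com URL are made up.

```python
import quopri


def to_quoted_printable(text: str) -> str:
    """Encode text as quoted-printable; long lines get "=" soft breaks."""
    return quopri.encodestring(text.encode("utf-8")).decode("ascii")


def from_quoted_printable(encoded: str) -> str:
    """Decode quoted-printable, removing the soft breaks again."""
    return quopri.decodestring(encoded.encode("ascii")).decode("utf-8")


# A made-up single-line URL that is longer than one encoded line allows.
url = "http://example.com/" + "a" * 90
encoded = to_quoted_printable(url)
restored = from_quoted_printable(encoded)
```

Here `encoded` spans more than one line on the wire, each continued line ending in “=”, while `restored` is the original single-line URL again.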

Outlook doesn’t do that either. It just wants to mangle your emails.

Conclusion

The world moved on and adopted HTML emails, which don’t have this newline problem. For those of us who do think HTML emails are an atrocity to be used sparingly, if at all, the idiosyncrasies of plaintext email have to be addressed. Outlook 2011 appears to do even worse than Entourage 2008 at this problem, by not dealing with it at all. Meanwhile, a bunch of Microsoft “MVPs” on their forums apparently cloud the issue with promises of support and unrelated commentary.

Given the sad state of email clients on the Mac, I believe Thunderbird is now my only option for sane plaintext messaging.